Tyler Cowen talks to Matt Yglesias about The Great Stagnation . . . . Here was my book review – “Tyler Cowen’s Techno Slump.”

Caveats. Already!

If it’s true, as Nick Schulz notes, that FCC Commissioner Copps and others really think Chairman Genachowski’s proposal today “is the beginning . . . not the end,” then all bets are off. The whole point is to relieve the overhanging regulatory threat so we can all move forward. More — much more, I suspect — to come . . . .

Killing the Master Switch

Adam Thierer nicely dissects a bunch of really sloppy arguments by Tim Wu, author of a new book on information industries called The Master Switch. (Scroll down to the comments section.)

Libertarians do NOT believe everything will be all sunshine and roses in a truly free marketplace. There will indeed be short term spells of what many of us would regard as excessive market power. The difference between us comes down to the amount of faith we would place in government actors versus market forces / evolution to better solve that problem. Libertarians would obviously have a lot more patience with markets and technological change, and would be willing to wait and see how things work out. We believe, as I have noted in my previous responses, that it’s often during what critics regard as a market’s darkest hour that innovation is producing some of the most exciting technologies with the greatest potential to disrupt the incumbents whose “master switch” you fear. Again, we are simply more bullish on what I have called experimental, evolutionary dynamism. Innovators and entrepreneurs don’t sit still; they respond to incentives, and for them, short-term spells of “market power” are golden opportunities. Ultimately, that organic, bottom-up approach to addressing “market power” or “market failure” simply makes a lot more sense to us – especially because it lacks the coercive element that your approach would bring to bear preemptively to solve such problems.

For Adam’s comprehensive six-part review of the book, go here.

The End of Net Neutrality?

In what may be the final round of comments in the Federal Communications Commission’s Net Neutrality inquiry, I offered some closing thoughts, including:

- Does the U.S. really rank 15th — or even 26th — in the world in broadband? No.

- The U.S. generates and consumes substantially more IP traffic per Internet user and per capita than any other region of the world.

- Among individual nations, only South Korea generates significantly more IP traffic than the U.S. (Canada and the U.S. are equal.)

- U.S. wired and wireless broadband networks are among the world’s most advanced, and the U.S. Internet ecosystem is healthy and vibrant.

- Latency is increasingly important, as demonstrated by a young company called Spread Networks, which built a new optical fiber route from Chicago to New York to shave mere milliseconds off the existing fastest network offerings. This example shows the importance — and legitimacy — of “paid prioritization.”

- As we wrote: “One way to achieve better service is to deploy more capacity on certain links. But capacity is not always the problem. As Spread shows, another way to achieve better service is to build an entirely new 750-mile fiber route through mountains to minimize laser light delay. Or we might deploy a network of server caches that store non-realtime data closer to the end points of networks, as many Content Delivery Networks (CDNs) have done. But when we can’t build a new fiber route or store data — say, when we need to get real-time packets from point to point over the existing network — yet another option might be to route packets more efficiently with sophisticated QoS technologies.”

- Exempting “wireless” from any Net Neutrality rules is necessary but not sufficient to protect robust service and innovation in the wireless arena.

- “The number of Wi-Fi and femtocell nodes will only continue to grow. It is important that they do, so that we might offload a substantial portion of traffic from our mobile cell sites and thus improve service for users in mobile environments. We will expect our wireless devices to achieve nearly the robustness and capacity of our wired devices. But for this to happen, our wireless and wired networks will often have to be integrated and optimized. Wireline backhaul — whether from the cell site or via a residential or office broadband connection — may require special prioritization to offset the inherent deficiencies of wireless. Already, wireline broadband companies are prioritizing femtocell traffic, and such practices will only grow. If such wireline prioritization is restricted, crucial new wireless connectivity and services could falter or slow.”

- The same goes for “specialized services,” which some suggest be exempted from new Net Neutrality regulations. Again, necessary but not sufficient.

- “Regulating the ‘basic’ Internet but not ‘specialized’ services will surely push most of the network and application innovation and investment into the unregulated sphere. A ‘specialized’ exemption, although far preferable to a Net Neutrality world without such an exemption, would tend to incentivize both CAS providers and ISPs service providers to target the ‘specialized’ category and thus shrink the scope of the ‘open Internet.’ In fact, although specialized services should and will exist, they often will interact with or be based on the ‘basic’ Internet. Finding demarcation lines will be difficult if not impossible. In a world of vast overlap, convergence, integration, and modularity, attempting to decide what is and is not ‘the Internet’ is probably futile and counterproductive. The very genius of the Internet is its ability to connect to, absorb, accommodate, and spawn new networks, applications and services. In a great compliment to its virtues, the definition of the Internet is constantly changing.”

Some good short reads

Scott Grannis on the “bond bubble” conundrum.

Thomas Cooley and Lee Ohanian on “Lessons from the Depression.”

Tim Carney on the real Republican divide.

Tech Nerds Talk

A good conversation between Harry McCracken of Technologizer and Bob Wright of bloggingheads.tv. Topics include Apple’s ascent (and world domination?); iPhone vs. Android; whither Microsoft; Facebook’s privacy flub; etc.

Quote of the Day

“My guess is that the euro will survive, but no one will trust it like they used to. At the end of the day, it’s an entitlement problem. In Greece, the public sector makes up 40% or more of the work force, with short weeks, lots of vacation and lavish retirement benefits. All of that needs to be paid for with real income, not debt, and the markets are anticipating the day of reckoning. One can only hope European policy makers listen to the market. I wonder if California and Medicare are taking notes.”

— Andy Kessler, May 8, 2010

China won’t repeat protectionist past in digital realm

See our new CircleID commentary on the China-Google dustup and its implications for an open Internet:

China is nowhere near closing for business as it did five centuries ago. One doubts, however, that the Ming emperor knew he was dooming his people for the next couple hundred years, depriving them of the goods and ideas of the coming Industrial Revolution. China’s present day leaders know this history. They know technology. They know turning away from global trade and communication would doom them far more surely than would an open Internet.

Did Phil and Tiger lead to Akamai’s record 3.45 terabit day?

Akamai announced a record peak in traffic volume on its content delivery network on April 9.

In addition to reaching a milestone for peak traffic served this past Friday, the Akamai network also hit a new peak during the same day for video streaming, as well as a near high for total requests served.

- With online interest in major sporting events – including professional golf and baseball – helping to drive the surge in demand, Akamai delivered its largest ever traffic for high definition video streaming.

- Over the course of the day, Akamai logged over 500 billion requests for content, a sum equal to serving content to every human once every 20 minutes.

- At peak, Akamai supported over 12 million requests per second – a rate roughly equivalent to serving content to the entire population of the United States every 30 seconds.
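A quick back-of-the-envelope check of Akamai's two comparisons, as a minimal sketch. The population figures are my own rough 2010 estimates (about 6.8 billion worldwide, about 309 million in the U.S.), not numbers from the announcement, and the function names are purely illustrative.

```python
# Rough sanity check of the comparisons in Akamai's announcement.
# ASSUMPTION: population figures are approximate 2010 estimates,
# not taken from Akamai.
WORLD_POPULATION = 6.8e9
US_POPULATION = 309e6


def minutes_between_requests(requests_per_day, population):
    """Average minutes between requests if spread evenly over everyone."""
    requests_per_person = requests_per_day / population
    return 24 * 60 / requests_per_person


def seconds_to_serve_everyone(requests_per_second, population):
    """Seconds needed to serve one request to each person at a given rate."""
    return population / requests_per_second


# 500 billion requests in a day, spread across the world's population
print(round(minutes_between_requests(500e9, WORLD_POPULATION), 1))

# 12 million requests per second, one request per U.S. resident
print(round(seconds_to_serve_everyone(12e6, US_POPULATION), 1))
```

Under those assumed populations, both results land close to the announcement's "every 20 minutes" and "every 30 seconds."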

Climate Detective Gets His Mann

If you really want to understand the climate debate, you simply must read this book, by A.W. Montford, about a Canadian scientific detective named Steve McIntyre, who humbly but doggedly pursued the truth about the 1,000-year temperature reconstructions that generated the famed “hockey stick.”

The November 2009 email “hack” of Britain’s Climatic Research Unit that has generated so much recent news is only a brief epilogue. The real story happened day by day over the last decade as McIntyre, a retired mining engineer, and his fellow Canadian Ross McKitrick, an economist, searched for, and then through, shabbily constructed data sets and magical algorithms, with surprising finds on almost every page.

As my friend George Gilder wrote:

The reader should know that the supposed email “scandal,” as described in the book, is in fact a rather trivial and even defensible part of the story. Few people are at their best in emails. What is shocking — and I use the word advisedly as a confirmed sceptic not easily shocked — is the so called science. I never imagined that it was quite this bad. It is shoddy beyond easy belief.

The hockey stick chart mostly reflects a defective algorithm that extends and inflates a few deceptive signals from as few as 20 cherry-picked trees in Colorado and Russia into a hockey stick chart that is replicated repeatedly through reshuffles of the same or similar defective and factitious data to capture and define two thousand years of climate history. These people simply had no plausible case and were pressed by their political sponsors to contrive a series of Potemkin charts.

Almost, but not quite, as surprising, was Montford’s narrative itself. Somehow he turned an esoteric battle over statistical methodology into a captivating “what happens next” mystery. British science writer Matt Ridley agreed:

Montford’s book is written with grace and flair. Like all the best science writers, he knows that the secret is not to leave out the details (because this just results in platitudes and leaps of faith), but rather to make the details delicious, even to the most unmathematical reader. I never thought I would find myself unable to put a book down because — sad, but true — I wanted to know what happened next in an r-squared calculation. This book deserves to win prizes.

Engrossing. Astonishing. Devastating.

This Year’s Office Pool

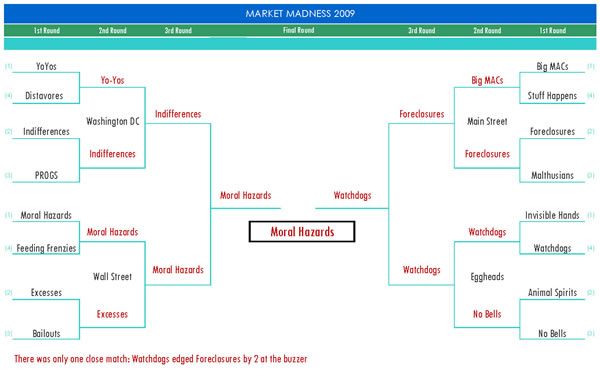

The Yo-Yos versus the Distavores. The HurriKeynes versus the Invisible Hands. And the team with more Monetary Madness appearances than any other — Stuff Happens. This was the scientific bracketology that determined the real cause of the Great Panic at the American Economic Association’s recent meetings:

(hat tip: David Warsh)

Quote of the Day

“…commercial real estate loans should not be marked down because the collateral value has declined. It depends on the income from the property, not the collateral value.”

— Ben Bernanke, Feb. 24, 2009, finally, if tamely, acknowledging the crucial role of mark-to-market accounting in the financial death spiral.

(via Brian Wesbury)

Did the FCC get the White House jobs memo?

That’s the question I ask in this Huffington Post article today.

The Crucial But Unknown Cause

Among all the books, articles, and academic papers analyzing the financial meltdown, very few have pinpointed and exposed what I think was the accelerant that turned a problem into an all-out panic: namely, the zealous application of mark-to-market accounting beginning in the autumn of 2007. In this video, two of these very few — Brian Wesbury and Steve Forbes — discuss the meltdown, mark-to-market’s crucial role, and the stock market’s short- and mid-term prospects. Wesbury and Forbes have also written two great books explaining the Great Panic, why it’s not as bad as you think, and how capitalism will save us.

Holman Jenkins today also picks up the theme of mark-to-market’s central role in the panic.

20 Good Questions

Wyoming wireless operator Brett Glass has 20 questions for the FCC on Net Neutrality. Some examples:

1. I operate a public Internet kiosk which, to protect its security and integrity, has no way for the user to insert or connect storage devices. The FCC’s policy statement says that a provider of Internet service must allow users to run applications of their choice, which presumably includes uploading and downloading. Will I be penalized if I do not allow file uploads and downloads on that machine?

4. I operate a wireless hotspot in my coffeehouse. I block P2P traffic to prevent one user from ruining the experience for my other customers. Do the FCC rules say that I must stop doing this?

6. I am a cellular carrier who offers Internet services to users of cell phones. Due to spectrum limitations, multimedia streaming by more than a few users would consume all of the bandwidth we have available not only for data but also for voice calls. May we restrict these protocols to avoid running out of bandwidth and to avoid disruption to telephone calls (some of which may be E911 calls or other urgent traffic)?

7. I am a wireless ISP operating on unlicensed spectrum. Because the bands are crowded and spectrum is scarce, I must limit each user’s bandwidth and duty cycle. Rather than imposing hard limits or overage charges, I would like to set an implicit limit by prohibiting P2P, with full disclosure that I am doing so. Is this permitted under the FCC’s rules?

14. I am an ISP that accelerates users’ Web browsing by rerouting requests for Web pages to a Web cache (a device which speeds up Web browsing, conceived by the same people who developed the World Wide Web) and then to special Internet connections which are asymmetrical (that is, they have more downstream bandwidth than upstream bandwidth). The result is faster and more economical Web browsing for our users. Will the FCC say that our network “discriminates” by handling Web traffic in this special way to improve users’ experience?

15. We are an ISP that improves the quality of VoIP by prioritizing VoIP packets and sending them through a different Internet connection than other traffic. This technique prevents users from experiencing problems with their telephone conversations and ensures that emergency calls will get through. Is this a violation of the FCC’s rules?

18. We’re an ISP that serves several large law offices as well as other customers. We are thinking of renting a direct “fast pipe” to a legal research database to shorten the attorneys’ response times when they search the database. Would accelerating just this traffic for the benefit of these customers be considered “discrimination?”

19. We’re a wireless ISP. Most of our customers are connected to us using “point-to-multipoint” radios; that is, the customers’ connections share a single antenna at our end. However, some high volume customers ask to buy dedicated point-to-point connections to get better performance. Do these connections, which are engineered by virtually all wireless ISPs for high bandwidth customers, run afoul of the FCC’s rules against “discrimination?”

Managing Internet Abundance

See our new commentary at CircleID:

The Internet has two billion global users, and the developing world is just hitting its growth phase. Mobile data traffic is doubling every year, and soon all four billion mobile phones will access the Net. In 2008, according to a new UC-San Diego study, Americans consumed over 3,600 exabytes of information, or an average of 34 gigabytes per person per day. Microsoft researchers argue in a new book, “The Fourth Paradigm,” that an “exaflood” of real-world and experimental data is changing the very nature of science itself. We need completely new strategies, they write, to “capture, curate, and analyze” these unimaginably large waves of information.

As the Internet expands, deepens, and thrives—growing in complexity and importance—managing this dynamic arena becomes an ever bigger challenge. Iran severs access to Twitter and Gmail. China dramatically restricts individual access to new domain names. The U.S. considers new Net Neutrality regulation. Global bureaucrats seek new power to allocate the Internet address space. All the while, dangerous “botnets” roam the Web’s wild west. Before we grab, restrict, and possibly fragment a unified Web, however, we should stop and think. About the Internet’s pace of growth. About our mostly successful existing model. And about the security and stability of this supreme global resource.

Welcome to Title II, Sergey and Larry

Excellent analysis of Google’s plan to build a few experimental fiber networks from my former colleague Barbara Esbin:

NetworkWorld reports that by constructing its own fiber network, Google “is trying to push its vision for how the Internet as a whole should operate.” I wish the company all the success in the world with GoogleNet. Business model experimentation and new entry to the broadband Internet service provider market like this should be encouraged. If this “open access” common carrier network proves to be a viable business model that attracts both customers and followers, it will be a fabulous addition to the domestic Internet ecosystem. But this vision should not be turned into unnecessary government mandates for other Internet network operators who are similarly trying to experiment with their business models in this brave new digital world.

Surprisingly, I also agree with Harold Feld’s analysis:

the telecom world is all abuzz over the news that Google will build a bunch of Gigabit test-beds. I am perfectly happy to see Google want to drop big bucks into fiber test beds. I expect this will have impact on the broadband market in lots of ways, and Google will learn a lot of cool things that will help it make lots of money at its core business — organizing information and selling that service in lots of different ways to people who value it for different reasons. But Google no more wants to be a wireline network operator than it wanted to be a wireless network operator back when it was willing to bid on C Block in the 700 MHz Auction.

So what does Google want? As I noted then: “Google does not want to be a network operator, but it wants to be a network architect.” Oh, it may end up running networks. Google has a history of stepping up to do things that further its core business when no one else wants to step up, as witnessed most recently by their submitting a bid to serve as the database manager for the broadcast white spaces devices. But what it actually wants to do is modify the behavior of the platforms on which it rides to better suit its needs. Happily, since those needs coincide with my needs, I don’t mind a bit.

I do mind.

.9 x 4,294,967,296 . . . and counting

We’ve been discussing the dramatic growth of the global Internet and the expansion of physical devices and virtual spaces that come with the mobile revolution, social networking, cloud computing, and the larger move of the Net into every business practice and cultural nook.

Last week ICANN, the organization that administers the Internet’s domain space, announced that fewer than 10% of current-generation Internet addresses (IPv4) remain unallocated. In any network realm, a move above 90% capacity is an alarm bell that needs attention. IPv6 is the next generation address space and is being deployed. But the move needs to accelerate to ensure the unabated growth of the Net.

Developed in the 1990s, IPv6 has been available for allocation to ISPs since 1999. An increasing number of ISPs have been deploying IPv6 over the past decade, as have governments and businesses. The biggest attraction of IPv6 is the enormous address space it provides. Instead of just 4 billion IPv4 addresses – fewer than the number of people on the planet – there are 340,282,366,920,938,463,463,374,607,431,768,211,456 IPv6 addresses. An easier way to think of this number is 340 trillion trillion trillion addresses.

Or, the famous comparison: If IPv4 is a golf ball, IPv6 is the Sun.
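The address arithmetic is easy to reproduce. Here is a minimal sketch using only the standard address widths (32 bits for IPv4, 128 bits for IPv6); the variable names are mine, and the 90% figure is simply the round number from the announcement, not an exact count.

```python
# Address-space arithmetic for IPv4 vs. IPv6.
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_total = 2 ** IPV4_BITS   # 4,294,967,296 addresses
ipv6_total = 2 ** IPV6_BITS   # the 39-digit figure quoted above

# ASSUMPTION: exactly 90% allocated, the round threshold in the announcement.
ipv4_allocated = ipv4_total * 9 // 10
ipv4_remaining = ipv4_total - ipv4_allocated

print(f"IPv4 total:     {ipv4_total:,}")
print(f"IPv4 allocated: {ipv4_allocated:,}")
print(f"IPv4 remaining: {ipv4_remaining:,}")
print(f"IPv6 total:     {ipv6_total:,}")

# "340 trillion trillion trillion": one trillion cubed is 10**36.
print(ipv6_total // (10 ** 12) ** 3)

# Each extra bit doubles the space, so IPv6 is 2**96 times larger than IPv4.
print(ipv6_total // ipv4_total == 2 ** (IPV6_BITS - IPV4_BITS))
```

Integer arithmetic is used throughout because a 128-bit count is too large for a floating-point number to hold exactly.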

What Would Net Neutrality Mean for U.S. Jobs?

See our new analysis of Net Neutrality regulation’s possible impact on the U.S. job market.

ExaTablet?

The Wall Street Journal’s Digits blog asks, “Could Verizon Handle Apple Tablet Traffic?”

The tablet’s little brother, the iPhone, has already shown how an explosion in data usage can overload a network, in this case AT&T’s. And the iPhone is hardly the kind of data guzzler the tablet is widely expected to be. After all, it’s one thing to squint at movies on a 3.5-inch screen and quite another to watch them in relatively cinematic 10 inches.

“Clearly this is an issue that needs to be fixed,” says Broadpoint Amtech analyst Brian Marshall. “It can grind the networks to a halt.”