Yesterday’s Wall Street Journal story on the supposed softening of Google’s “net neutrality” policy stance, which I posted about here, predictably got all the nerds talking.

Here was my attempt, over at the Technology Liberation Front, to put this topic in perspective:

_______________________

Bandwidth, Storewidth, and Net Neutrality

Very happy to see the discussion over The Wall Street Journal’s Google/net neutrality story. Always good to see holes poked and the truth set free.

But let’s not allow the eruptions, backlashes, recriminations, and “debunkings” — This topic has been debunked. End of story. Over. Sit down! — obscure the still-fundamental issues. This is a terrific starting point for debate, not an end.

Content delivery networks (CDNs) and caching have always been a part of my analysis of the net neutrality debate. Here was testimony that George Gilder and I prepared for a Senate Commerce Committee hearing almost five years ago, in April 2004, where we predicted that a somewhat obscure new MCI “network layers” proposal, as it was then called, would be the next big communications policy issue. (At about the same time, my now-colleague Adam Thierer was also identifying this as an emerging issue/threat.)

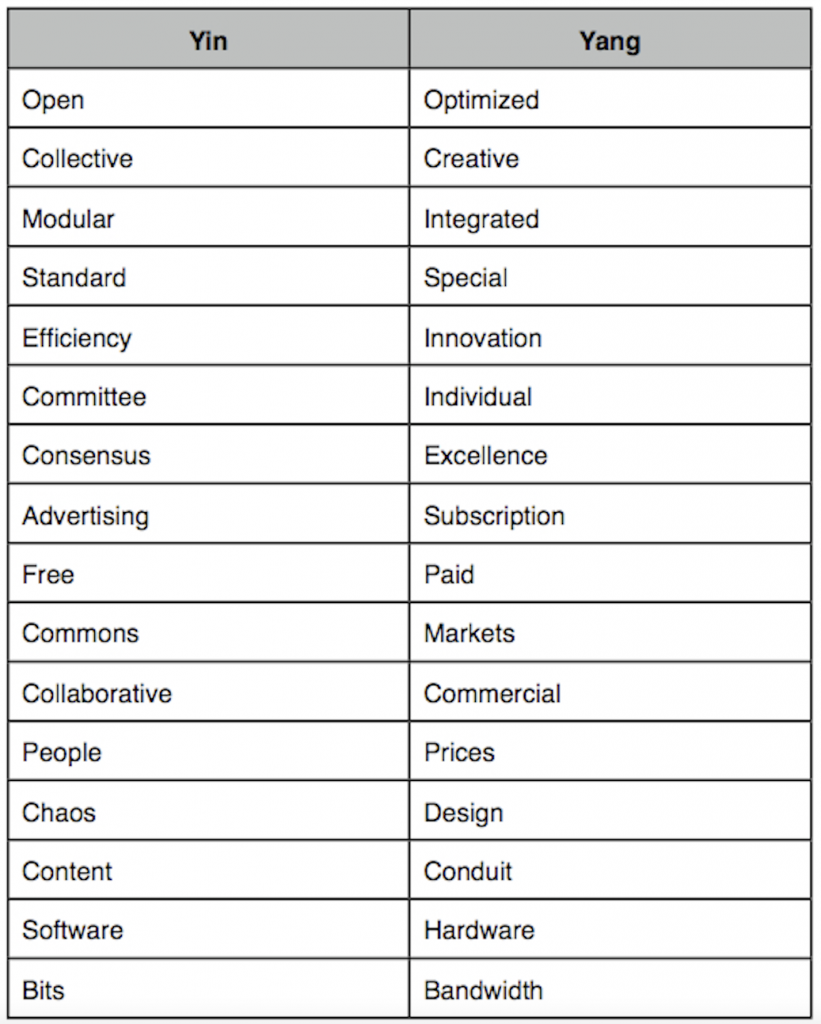

Gilder and I tried to make the point that this “layers” (or network neutrality) proposal would, even if attractive in theory, be very difficult to define or implement. Networks are a dynamic realm of ever-shifting bottlenecks, where bandwidth, storage, caching, and peering, in the core, edge, and access, in the data center, on end-user devices, from the heavens and under the seas, constantly require new architectures, upgrades, and investments, thus triggering further cascades of hardware, software, and protocol changes elsewhere in this growing global web. It seemed to us at the time that this new policy proposal, ill-defined as it was, was probably a weapon for one group of Internet companies, with one type of business model, to bludgeon another set of Internet companies with a different business model.

We wrote extensively about storage, caching, and content delivery networks in the pages of the Gilder Technology Report, first laying out the big conceptual issues in a 1999 article, “The Antediluvian Paradigm.” [Correction: “The Post-Diluvian Paradigm”] Gilder coined a word for this nexus of storage and bandwidth: Storewidth. Gilder and I even hosted a conference, also dubbed “Storewidth,” dedicated to these storage, memory, and content delivery network technologies. See, for instance, this press release for the 2001 conference with all the big players in the field, including Akamai, EMC, Network Appliance, Mirror Image, and one Eric Schmidt, chief executive officer of . . . Novell. In 2002, Google’s Larry Page spoke, as did Jay Adelson, founder of the big data-center-network-peering company Equinix, along with Yahoo! and many of the big network and content companies.