Over the July 4 weekend, relatives and friends kept asking me: Which mobile phone should I buy? There are so many choices.

I told them I love my iPhone, but all kinds of new devices from BlackBerries and Samsungs to Palm’s new Pre make strong showings, and the lesser-known HTC, one of the biggest innovators of the last couple of years, is churning out cool phones across the price-point and capability spectrum.

Several days before, on Wednesday, July 1, I had made a mid-afternoon stop at the local Apple store. It was packed. A short line formed at the entrance where a salesperson was taking names on a clipboard. After 15 minutes of browsing, it was my turn to talk to a salesman, and I asked: “Why is the store so crowded? Some special event?”

“Nope,” he answered. “This is pretty normal for a Wednesday afternoon, especially since the iPhone 3G S release.”

So, to set the scene: The retail stores of Apple Inc., a company not even in the mobile phone business two short years ago, are jammed with people craving iPhones and other networked computing devices. And competing choices from a dozen other major mobile device companies are proliferating and leapfrogging each other technologically so fast as to give consumers headaches.

But amid this avalanche of innovative alternatives, we hear today that:

> The Department of Justice has begun looking into whether large U.S. telecommunications companies such as AT&T Inc. and Verizon Communications Inc. are abusing the market power they have amassed in recent years . . . .
>
> . . . The review is expected to cover all areas from land-line voice and broadband service to wireless.
>
> One area that might be explored is whether big wireless carriers are hurting smaller rivals by locking up popular phones through exclusive agreements with handset makers. Lawmakers and regulators have raised questions about deals such as AT&T’s exclusive right to provide service for Apple Inc.’s iPhone in the U.S. . . .
>
> The department also may review whether telecom carriers are unduly restricting the types of services other companies can offer on their networks . . . .

On what planet are these Justice Department lawyers living?

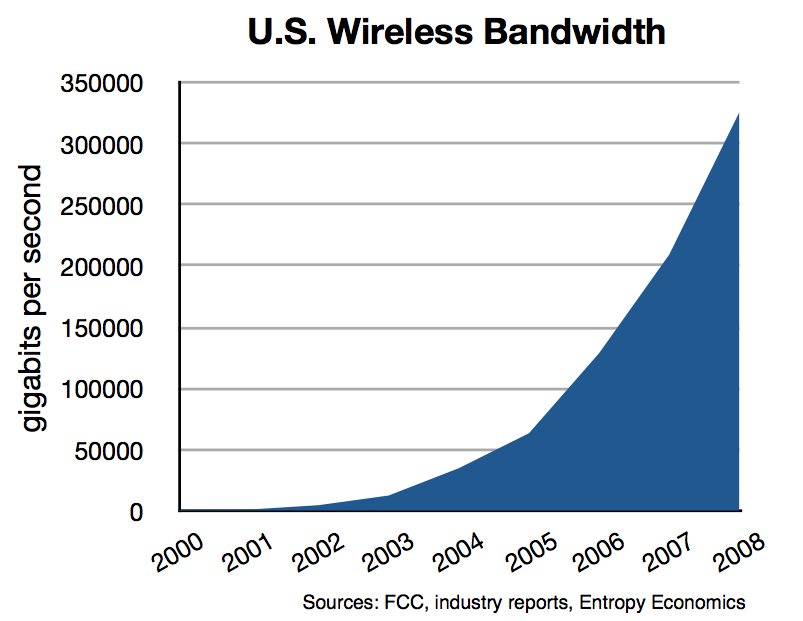

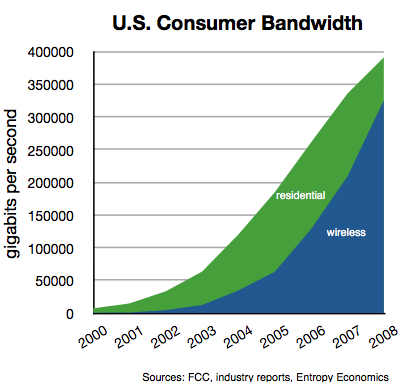

Most certainly not the planet where consumer wireless bandwidth rocketed by a factor of 542 (or 54,200%) over the last eight years. The chart below, taken from our new research, shows that by 2008, U.S. consumer wireless bandwidth — a good proxy for the power of the average citizen to communicate using mobile devices — grew to 325 terabits per second from just 600 gigabits per second in 2000. This 500-fold bandwidth expansion enabled true mobile computing, changed industries and cultures, and connected billions across the globe. Perhaps the biggest winners in this wireless boom were low-income Americans, and their counterparts worldwide, who gained access to the Internet’s riches for the first time.
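As a quick check on that arithmetic, here is a minimal Python sketch that recomputes the growth multiple from the two endpoints quoted above (600 gigabits per second in 2000, 325 terabits per second in 2008). The function name and the decimal unit conversion (1 terabit = 1,000 gigabits) are my own assumptions for illustration and are not taken from the underlying research.

```python
# Endpoints quoted in the text, both converted to gigabits per second.
# Assumption: decimal units, so 1 terabit = 1,000 gigabits.
BANDWIDTH_2000_GBPS = 600.0
BANDWIDTH_2008_GBPS = 325.0 * 1_000


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


multiple = growth_multiple(BANDWIDTH_2000_GBPS, BANDWIDTH_2008_GBPS)

# The multiple itself, and the same multiple restated as a percentage
# of the 2000 level.
print(f"Growth multiple: about {multiple:.0f}x")
print(f"As a percentage of the 2000 level: about {multiple * 100:,.0f}%")
```

Rounded to a whole number, the multiple comes out at 542, which matches the factor cited above. The 54,200% figure appears to be that rounded multiple restated as a percentage.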

Meanwhile, Sen. Herb Kohl of Wisconsin is egging on Justice and the FCC with a long letter full of complaints right out of the 1950s. He warns of consolidation and stagnation in the dynamic, splintering communications sector; of dangerous exclusive handset deals even as mobile computers are perhaps the world’s leading example of innovative diversity; and of rising prices as communications costs plummet.

Kohl cautioned in particular that text message prices are rising and could severely hurt wireless consumers. But this complaint does not square with the numbers: the top two U.S. mobile phone carriers now transmit more than 200 billion text messages per calendar quarter.

It’s clear: consumers love paid text messaging despite similar applications like email, Skype calling, and instant messaging (IM, or chat) that are mostly free. A couple of weeks ago I was asking a family babysitter about the latest teenage trends in text messaging and mobile devices, and I noted that I’d just seen highlights on SportsCenter of the National Texting Championship. Yes, you heard right. A 15-year-old girl from Iowa, who had only been texting for eight months, won the speed-texting contest and a prize of $50,000. I told the babysitter that ESPN reported this young Iowan sent a crazy-sounding 14,000 texts per month. “Wow, that’s a lot,” the babysitter said. “I only do 8,000 a month.”

I laughed. Only eight thousand.

In any case, Sen. Kohl’s complaint of a supposed rise in per-text pricing from $0.10 to $0.20 is mostly irrelevant. Few people pay these per-text prices. A quick scan of the latest plans of one carrier, AT&T, shows three offerings: 200 texts for $5.00; 1,500 texts for $15.00; or unlimited texts for $20.00. These plans correspond to per-text prices, respectively, of 2.5 cents, 1 cent, and, in the case of our 8,000-text teen, just 0.25 cents. Not anywhere close to 20 cents.
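For readers who want to check the per-text figures, the following is a minimal Python sketch of the division involved. The plan prices are the ones quoted above; the function name is hypothetical, and the sketch assumes a subscriber on a capped plan uses every included text, which gives the lowest possible effective price for that plan.

```python
# Capped plans quoted in the text: (included texts, monthly price in dollars).
CAPPED_PLANS = [(200, 5.00), (1500, 15.00)]
# Unlimited plan price quoted in the text, in dollars per month.
UNLIMITED_PRICE = 20.00


def cents_per_text(monthly_price: float, texts_sent: int) -> float:
    """Effective price per text in cents for a given monthly volume."""
    return monthly_price * 100 / texts_sent


# Assumption: every included text on a capped plan is actually used.
for texts, price in CAPPED_PLANS:
    rate = cents_per_text(price, texts)
    print(f"{texts:,} texts for ${price:.2f}: {rate:.2f} cents each")

# Unlimited plan at the two monthly volumes mentioned in the article:
# the babysitter's 8,000 texts and the texting champion's 14,000.
for texts in (8_000, 14_000):
    rate = cents_per_text(UNLIMITED_PRICE, texts)
    print(f"Unlimited at {texts:,} texts: {rate:.2f} cents each")
```

The heavier the usage on the unlimited plan, the lower the effective rate, so the texting champion's volume works out to an even smaller per-text price than the babysitter's.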

The criticism of exclusive handset deals, like the one between AT&T and Apple’s iPhone or Sprint and Palm’s new Pre, is bizarre. Apple wasn’t even in the mobile business two years ago. And after its Treo success several years ago, Palm, originally a maker of PDAs (remember those?), had fallen far behind. Remember, too, that RIM’s popular BlackBerry devices were, until recently, just email machines. Then there is Amazon, which created a whole new business and publishing model with its wireless Kindle book- and Web-reader that runs on the Sprint mobile network. These four companies made cooperative deals with service providers to help them launch risky products into an intensely competitive market with longtime global standouts like Nokia, Motorola, Samsung, LG, Sanyo, SonyEricsson, and others.

As The Wall Street Journal noted today:

> More than 30 devices have been introduced to compete with the iPhone since its debut in 2007. The fact that one carrier has an exclusive has forced other companies to find partners and innovate. In response, the price of the iPhone has steadily fallen. The earliest iPhones cost more than $500; last month, Apple introduced a $99 model.

If this is a market malfunction, let’s have more malfunctions. Isn’t Washington busy enough re-ordering the rest of the economy?

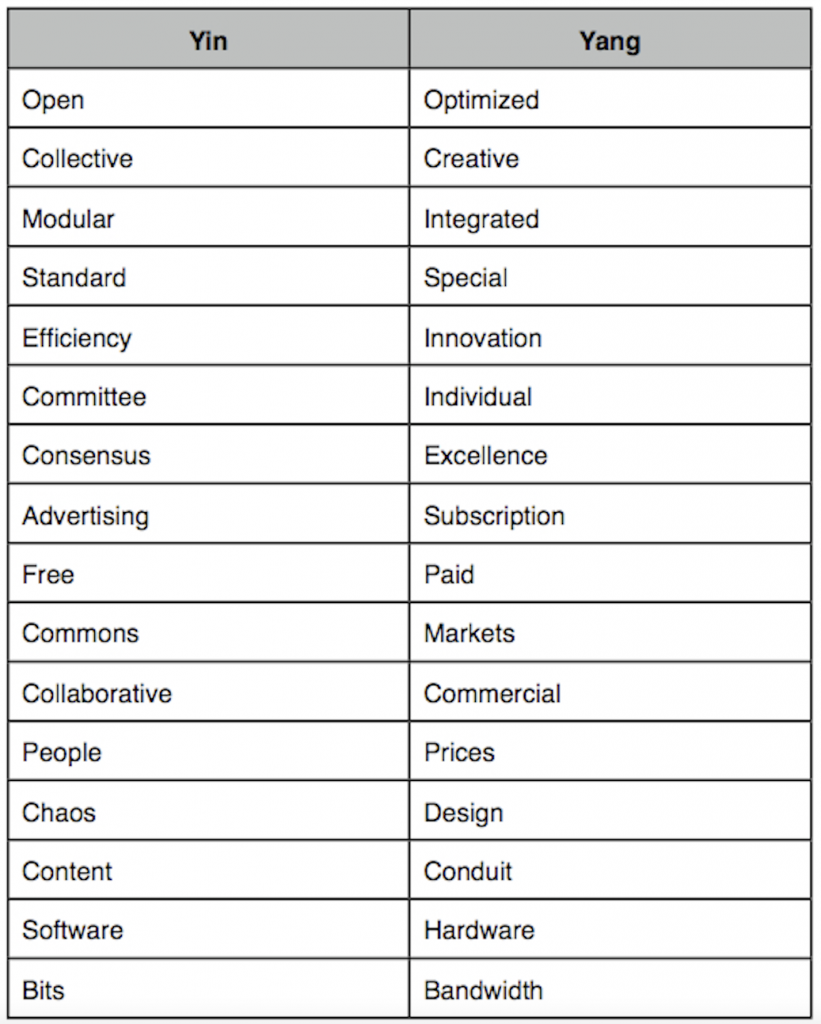

These new devices, with their high-resolution screens, fast processors, and substantial 3G mobile and Wi-Fi connections to the cloud, have launched a new era in Web computing. The iPhone now boasts more than 50,000 applications, mostly written by third-party developers and downloadable in seconds. Far from closing off consumer choice, the mobile phone business has never been remotely as open, modular, and dynamic.

There is no reason why 260 million U.S. mobile customers should be blocked from this onslaught of innovation in a futile attempt to protect a few small wireless service providers who might not — at this very moment — have access to every new device in the world, but who will no doubt tomorrow be offering a range of similar devices that all far eclipse the most powerful and popular device from just a year or two ago.

— Bret Swanson